One of the new features added to Cohesity DataPlatform in 4.0 is Erasure Coding, or EC for short. EC is a mathematical technique that stores a piece of data across N disks so that the data can still be reconstructed after the loss (erasure) of a few of those disks.

In Cohesity’s architecture, disks reside within hyperconverged nodes that comprise a cluster. EC blocks are distributed across nodes (not just disks) in the cluster to maximize both resilience and performance.
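The snippet below sketches one possible node-level placement policy for a stripe (the set of data and parity blocks encoded together). Each block of a stripe goes to a different node, and the starting node rotates from stripe to stripe. The function name `place_stripe` and the round-robin rule are hypothetical illustrations, not Cohesity's actual placement logic.

```python
def place_stripe(stripe_id: int, width: int, nodes: list[str]) -> list[str]:
    """Map each of the `width` blocks in a stripe to a distinct node.

    Hypothetical round-robin policy: the starting node rotates with the
    stripe id so that blocks (and load) spread evenly across the cluster.
    """
    if width > len(nodes):
        # Placing two blocks of one stripe on the same node would let a
        # single node failure erase two blocks at once.
        raise ValueError("stripe is wider than the cluster")
    start = stripe_id % len(nodes)
    return [nodes[(start + i) % len(nodes)] for i in range(width)]


nodes = [f"node{i}" for i in range(8)]
for sid in range(3):
    print(sid, place_stripe(sid, width=7, nodes=nodes))
```

Because no node holds two blocks of the same stripe, losing one node costs each stripe at most one block, which is the kind of loss the code is designed to repair.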

Without going deep into the mathematics, here’s a simple example:

Suppose you want to store two pieces of data, A and B. You store A on node X, B on node Y, and (A+B) on node Z. If we lose node X, we can still recover A by reading B from node Y and (A+B) from node Z and computing (A+B) − B = A.

In this simple example, we can tolerate one node failure with only 50% storage overhead: three blocks are stored for every two blocks of data.
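The example above uses ordinary addition and subtraction. Byte-oriented implementations commonly use XOR instead, which is addition in GF(2), where subtraction is the same operation. The sketch below swaps in XOR on that assumption; the node names follow the example, while the helper names are illustrative.

```python
def xor_bytes(x: bytes, y: bytes) -> bytes:
    """Bytewise XOR; in GF(2), addition and subtraction are the same operation."""
    assert len(x) == len(y), "blocks in a stripe must be the same size"
    return bytes(a ^ b for a, b in zip(x, y))


A = b"hello, "
B = b"world!!"

# One 2:1 stripe: data on nodes X and Y, parity (A "+" B) on node Z.
layout = {"X": A, "Y": B, "Z": xor_bytes(A, B)}


def recover(layout: dict[str, bytes], lost: str) -> bytes:
    """Rebuild the block on the `lost` node from the two surviving nodes."""
    survivors = [blk for name, blk in layout.items() if name != lost]
    return xor_bytes(*survivors)


# Any single node can be lost, including the parity node.
for lost in "XYZ":
    assert recover(layout, lost) == layout[lost]

overhead = (3 - 2) / 2  # one extra block stored per two data blocks
print(f"overhead: {overhead:.0%}")  # overhead: 50%
```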

Other mathematical techniques achieve higher failure tolerance (e.g., two node failures) with lower storage overhead.
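As an illustration of tolerating two failures, the sketch below adds two independent parity symbols to k data symbols: p = Σ dᵢ and q = Σ (i+1)·dᵢ, computed modulo a prime. Any two lost blocks can then be rebuilt by solving at most two linear equations. This is not Cohesity's implementation; production systems typically use Reed-Solomon codes over GF(2⁸). The prime field, the coefficients, and the function names here are assumptions chosen to keep the arithmetic readable.

```python
import itertools
from typing import Optional

P = 2_147_483_647  # the prime 2**31 - 1; an illustrative choice of field


def encode(data: list[int]) -> tuple[int, int]:
    """Two parity symbols: p = sum(d_i), q = sum((i+1) * d_i), both mod P."""
    p = sum(data) % P
    q = sum((i + 1) * d for i, d in enumerate(data)) % P
    return p, q


def recover(data: list[Optional[int]], p: Optional[int], q: Optional[int]):
    """Rebuild a k:2 stripe in which at most two blocks are None."""
    missing = [i for i, d in enumerate(data) if d is None]
    if len(missing) + (p is None) + (q is None) > 2:
        raise ValueError("a k:2 code tolerates at most two lost blocks")
    sp = sum(d for d in data if d is not None) % P
    sq = sum((i + 1) * d for i, d in enumerate(data) if d is not None) % P
    out = list(data)
    if len(missing) == 2:
        # Both parities survive. Solve d_i + d_j = rp and
        # (i+1)d_i + (j+1)d_j = rq; eliminating d_i gives
        # (j - i) * d_j = rq - (i+1) * rp.
        i, j = missing
        rp, rq = (p - sp) % P, (q - sq) % P
        out[j] = (rq - (i + 1) * rp) * pow(j - i, -1, P) % P
        out[i] = (rp - out[j]) % P
    elif len(missing) == 1:
        i = missing[0]
        if p is not None:
            out[i] = (p - sp) % P
        else:
            # Only q survives: (i+1) * d_i = q - sq.
            out[i] = (q - sq) * pow(i + 1, -1, P) % P
    # Any lost parity symbol is simply recomputed from the complete data.
    return out, encode(out)


data = [11, 22, 33, 44, 55]  # k = 5 data blocks; with p and q, a 5:2 stripe
p, q = encode(data)
stripe = data + [p, q]
for lost in itertools.combinations(range(7), 2):
    damaged = [None if n in lost else v for n, v in enumerate(stripe)]
    rebuilt, parity = recover(damaged[:5], damaged[5], damaged[6])
    assert rebuilt == data and parity == (p, q)
print("all 21 two-block losses recovered")
```

The coefficients 1 and (i+1) are chosen so that every pair of equations has a nonzero determinant (j − i) modulo P, which is what guarantees any two erasures are solvable as long as k is smaller than P.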

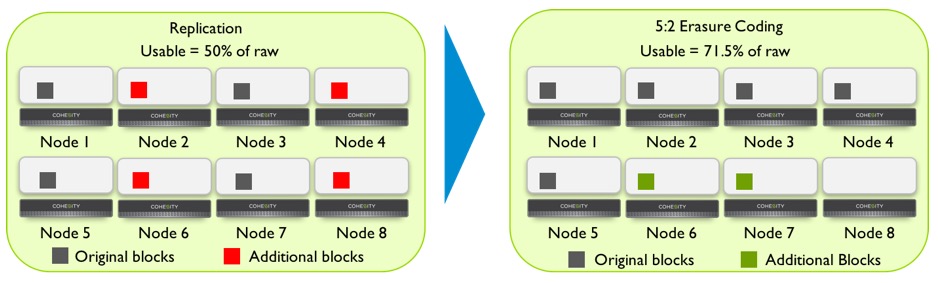

Here’s a simple visual comparison of usable capacity for RF=2 replication compared to 5:2 erasure coding:
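The arithmetic behind this comparison can be sketched as follows, assuming "5:2" means 5 data blocks plus 2 parity blocks per stripe, encoded with a code that can rebuild any 2 lost blocks. The 100 TB raw capacity is a hypothetical figure for illustration.

```python
def usable_fraction_replication(rf: int) -> float:
    """With replication, each logical byte is stored rf times."""
    return 1 / rf


def usable_fraction_ec(k: int, m: int) -> float:
    """With k:m erasure coding, each stripe stores k data + m parity blocks."""
    return k / (k + m)


raw_tb = 100  # hypothetical raw cluster capacity
for label, frac, failures in [
    ("RF=2", usable_fraction_replication(2), 1),
    ("EC 5:2", usable_fraction_ec(5, 2), 2),
]:
    print(f"{label}: {frac:.1%} usable "
          f"({raw_tb * frac:.1f} TB of {raw_tb} TB), "
          f"tolerates {failures} failure(s)")
```

Under these assumptions, 5:2 erasure coding makes about 71% of raw capacity usable versus 50% for RF=2, roughly 43% more logical data on the same hardware, while tolerating one more failure.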

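Cohesity lets users choose between mirroring and erasure coding, and change that choice at any time. This flexibility offers several advantages: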

- Old data can be migrated to EC in the background, so customers don’t need a rip-and-replace upgrade to realize the benefits!

- Some workloads (e.g., test and development, or T&D) may still want to use mirroring in their ViewBox for better performance.

- Delayed erasure coding – data can be ingested at higher throughput with mirroring, and older, cold data can be erasure coded later to realize the capacity benefits.
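Delayed erasure coding and background migration both come down to a policy that periodically finds cold mirrored data and converts it. The sketch below shows one such policy under stated assumptions: the `Chunk` record, the `convert_cold_chunks` function, and the "cold after N seconds" rule are hypothetical; mirroring is taken to be RF=2 and EC to be 5:2 by default. It only flips a layout label and tallies the raw bytes reclaimed; the actual re-encoding is out of scope.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    chunk_id: str
    size_bytes: int
    last_write_s: float       # time of the most recent write, in seconds
    layout: str = "mirror"    # "mirror" (RF=2) or "ec"


def convert_cold_chunks(chunks, now_s, cold_after_s, k=5, m=2):
    """Background pass: mark mirrored chunks that have gone cold for EC.

    Returns the raw bytes reclaimed, assuming RF=2 mirroring costs 2x the
    logical size and k:m erasure coding costs (k + m) / k.
    """
    reclaimed = 0.0
    for c in chunks:
        if c.layout == "mirror" and now_s - c.last_write_s >= cold_after_s:
            reclaimed += c.size_bytes * (2 - (k + m) / k)
            c.layout = "ec"  # label only; re-encoding would happen here
    return reclaimed


DAY = 86_400
chunks = [
    Chunk("hot", 1 << 20, last_write_s=10 * DAY),  # written just now
    Chunk("cold", 1 << 20, last_write_s=0),        # untouched for 10 days
]
saved = convert_cold_chunks(chunks, now_s=10 * DAY, cold_after_s=7 * DAY)
print([c.layout for c in chunks], int(saved))
```

Keeping fresh data mirrored avoids encoding work on the ingest path, while the periodic pass recovers capacity from data that is unlikely to be overwritten again.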

One of the interesting challenges in adding EC to Cohesity was that Cohesity supports the industry-standard NFS and SMB protocols. This means that data can be overwritten, or that writes can arrive smaller than we’d like, and we still have to make a distributed transaction work across many nodes and disks. Small writes cause packing inefficiencies: we may have to pad them, which consumes more storage than desired. Here, our flexible approach paid rich dividends. We simply write small writes mirrored and erasure code the data once enough of them have accumulated!
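The accumulate-then-encode approach can be sketched as a staging buffer: small writes are held (mirrored) until a full stripe's worth of data exists, which is then cut into k equal blocks for erasure coding. The class `SmallWriteBuffer`, the 4 KiB block size, and k = 5 are hypothetical parameters, not Cohesity's actual values. The helper `padding_if_coded_individually` estimates the padding that coding each small write as its own stripe would have cost.

```python
import math


class SmallWriteBuffer:
    """Hypothetical staging area: small writes stay mirrored until a full
    stripe's worth of data has accumulated, then it is cut into k blocks."""

    def __init__(self, block_size: int, k: int):
        self.block_size = block_size
        self.k = k
        self.pending = bytearray()              # data still held mirrored
        self.stripes: list[list[bytes]] = []    # full stripes ready for EC

    def write(self, payload: bytes) -> None:
        self.pending += payload
        stripe_bytes = self.block_size * self.k
        # Cut as many full, unpadded stripes as the buffer now holds.
        while len(self.pending) >= stripe_bytes:
            chunk = bytes(self.pending[:stripe_bytes])
            del self.pending[:stripe_bytes]
            blocks = [chunk[i:i + self.block_size]
                      for i in range(0, stripe_bytes, self.block_size)]
            self.stripes.append(blocks)


def padding_if_coded_individually(write_sizes, block_size, k):
    """Padding bytes if every write were erasure coded as its own stripe."""
    stripe = block_size * k
    return sum(math.ceil(s / stripe) * stripe - s for s in write_sizes)


buf = SmallWriteBuffer(block_size=4096, k=5)
sizes = [1000] * 50  # fifty 1,000-byte writes, 50,000 bytes in total
for s in sizes:
    buf.write(b"x" * s)
print(len(buf.stripes), len(buf.pending))
print(padding_if_coded_individually(sizes, 4096, 5))
```

With these numbers, the buffer produces two full 20,480-byte stripes with no padding and keeps the remaining 9,040 bytes mirrored, whereas encoding each write on its own would pad every 1,000-byte write up to a full stripe.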

As Cohesity clusters grow larger, we expect most customers to adopt erasure coding in their environments to gain higher fault tolerance as well as lower storage overhead.

With our newest 4.0 release, we’re thrilled to offer our users the flexibility to not only choose the optimal storage technique for their environment, but also the ability to transparently change at any time.